Embodied reasoning—the ability to ground perception, language, and action in the physical world—is becoming

central to robotic manipulation. Foundation models (LLMs, VLMs, and 3D vision models) bring broad world knowledge

and compositional generalization, yet turning these capabilities into reliable, closed‑loop manipulation remains

an open challenge: robots must reason about spatial relations, affordances, dynamics, and causality while adapting

online to novel scenes, objects, and tasks. This workshop convenes researchers across robotics, embodied AI,

perception, planning, control, simulation, and sim‑to‑real to chart the next steps in reasoning‑centric manipulation.

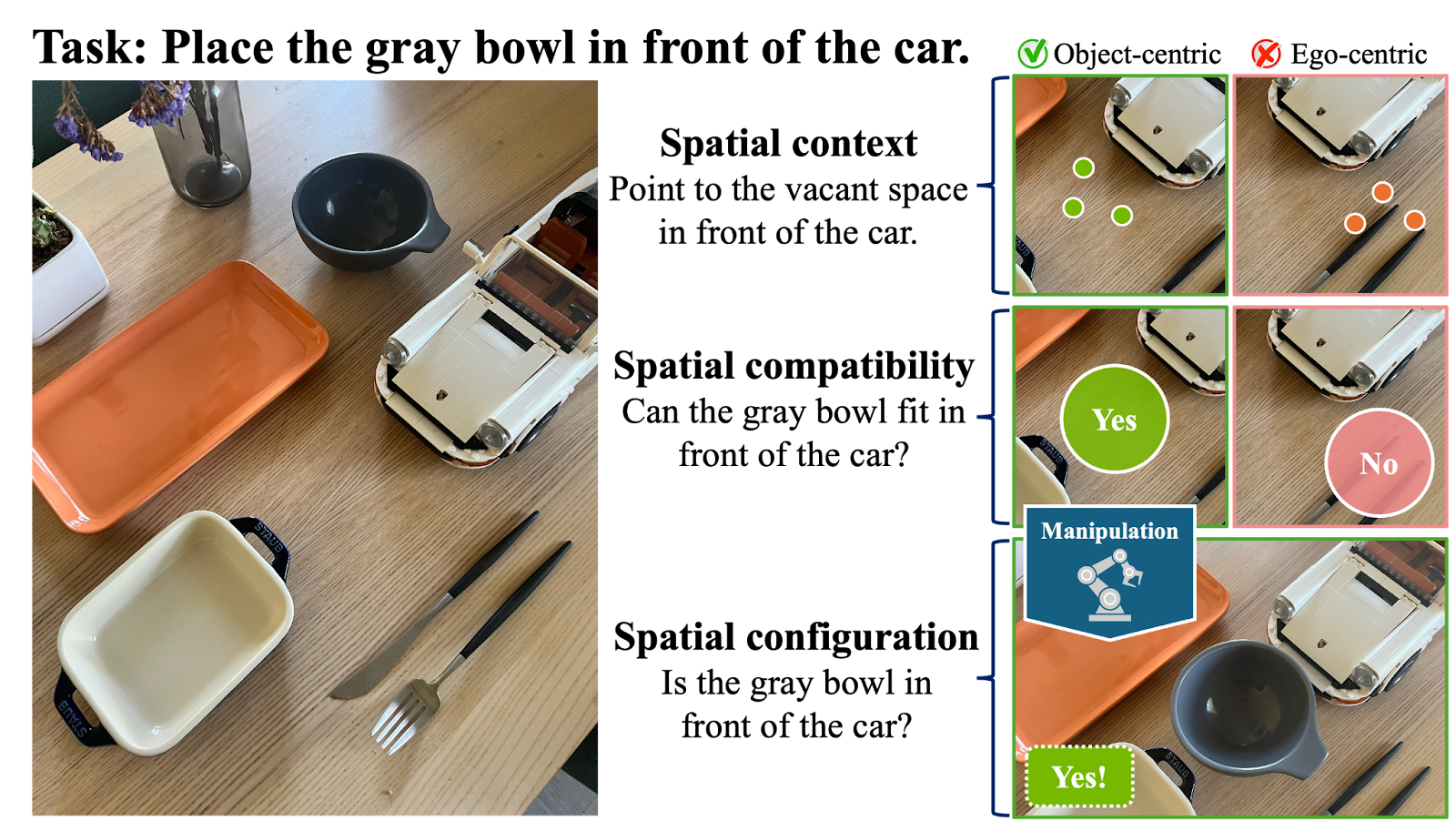

We introduce two community challenges to benchmark progress: RoboSpatial, evaluates a model's ability to understand

spatial relationships—where to point, fit, and place—across different camera views and scenes, and PointArena (Point‑Bench),

Measures precise, language‑guided pointing by asking models to select the correct pixel(s) in an image with automated scoring.

Together, these tracks measure generalization across viewpoints, sensors, and object sets; robustness to environmental

perturbations; and the fidelity of language‑to‑action grounding.

By bringing together diverse perspectives—from algorithmic foundations and datasets to hardware‑aware systems and

deployments—we aim to identify what forms of representation, learning, and planning truly scale embodied reasoning

for real‑world manipulation, and to catalyze collaboration between academia and industry.

We are excited to announce the challenges for the ERA workshop.

RoboSpatial evaluates a model’s embodied spatial reasoning by testing its ability to infer where to point, fit, and place from real-world RGB-D observations. It contains 350 spatial question–answer pairs collected across five real apartments, with both RGB and depth data captured using an iPhone 13 Pro Max. Each scene is annotated under three reasoning types: configuration (object relations), context (free-space reasoning), and compatibility (affordance and fit). By assessing how models interpret 3D spatial structure and generalize across diverse indoor scenes and viewpoints, RoboSpatial provides a diagnostic benchmark for grounding perception and language in actionable spatial understanding, and is increasingly adopted by frontier models such as Qwen3-VL and Gemini Robotics for evaluating embodied spatial reasoning.

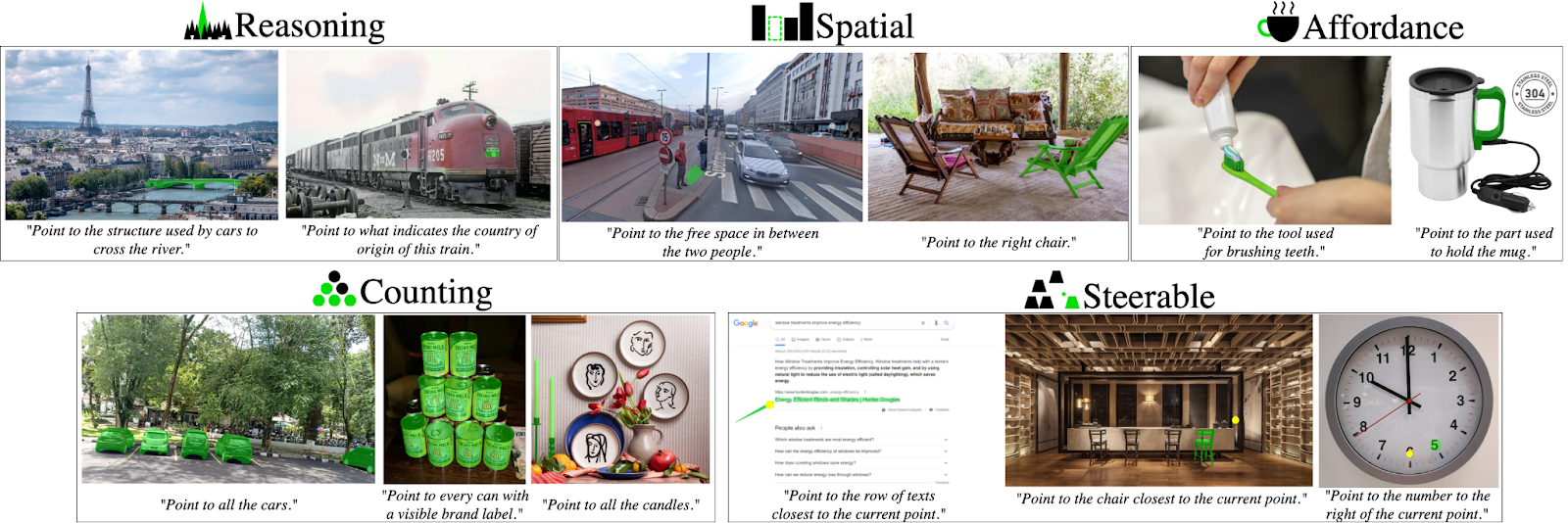

PointArena is the largest benchmark for evaluating language-guided pointing, comprising 982 text-image pairs with pixel-level target masks collected from public sources after April 20, 2025. The dataset is evenly divided into five task-driven categories—Spatial, Affordance, Counting, Steerable, and Reasoning—derived from a survey of question types frequently tackled by open-source MLLMs. Each category targets a distinct capability: 1) Spatial focuses on positional queries within scenes rich in spatial relationships or repeated objects (e.g., “Point to the leftmost tree in the image”); 2) Affordance emphasizes functional parts of objects, typically in tabletop scenes, prompting queries like “Point to the handle used for pouring”; 3) Counting features multiple similar items and supports queries about subsets based on number or attributes, such as “Point to all the blue cars in the image”; 4) Steerable leverages images from the PixMo dataset that include a reference point, guiding annotators to ask relative-position questions like “Point to the item closest to the marked point”; and 5) Reasoning presents event-rich or abstract scenes, inviting open-ended queries that require inference, such as “Point to the tallest man-made object in the image.”

| Event | Date |

|---|---|

| Challenge Launch | January 2026 (following workshop acceptance) |

| Simulation Phase Deadline | April 30, 2026 |

| Verification Period | May 2026 |

| Winner Notifications | End of May 2026 |

Evaluation. The two challenges will be evaluated independently. The primary metric is the average success rate across all tasks. Each challenge includes a public test split, which has already been released with the benchmark. Because participants can self-evaluate and post results to the leaderboards for both benchmarks, we will require submission of model checkpoints for verification. To minimize leakage of test information, each team will be limited to a small number of submissions.

Prizes. Final rankings will be determined by benchmark performance results. Prizes will be awarded to the winners, contingent on sponsor availability.

We will consider having posters from the challenge participants and invited posters from the main conference papers that are relevant, but will not hold a paper submission.

| Start Time (MDT) | End Time (MDT) | Event |

|---|---|---|

| 9:00 AM | 9:10 AM | Introduction and welcome |

| 9:10 AM | 9:45 AM | Invited speaker: Furong Huang |

| 9:45 AM | 10:20 AM | Invited speaker: Tianmin Shu |

| 10:20 AM | 10:55 AM | Invited speaker: Ranjay Krishna |

| 10:55 AM | 11:10 AM | Coffee break (Tentative Poster Session) |

| 11:10 AM | 11:45 AM | Invited speaker: Cheng Chi |

| 11:45 AM | 12:20 PM | Challenge overview and results summary |

| 12:20 PM | 12:30 PM | Winner and runner-up presentations for RoboSpatial and Point-Bench challenges |

| 12:30 PM | 2:00 PM | Lunch |

| 2:00 PM | 2:35 PM | Spotlight Presentation (15mins each) |

| 2:35 PM | 3:10 PM | Invited speaker: Jiajun Wu |

| 3:10 PM | 3:25 PM | Invited speaker: Karl Pertsch |

| 3:25 PM | 4:00 PM | Invited speaker: Yilun Du |

| 4:00 PM | 4:45 PM | Panel Discussion |

| 4:45 PM | 5:00 PM | Award ceremony and closing remark |

listed alphabetically